Authors:

(1) Nowshin Nawar Arony;

(2) Ze Shi Li;

(3) Bowen Xu;

(4) Daniela Damian.

4 METHODOLOGY

In our study, we collected user feedback from three popular platforms and employed the Socio-Technical Grounded Theory (STGT) [9] method. In particular, we adhered to the STGT data analysis method, which includes open coding, constant comparison, and basic memoing. We then proposed a model that can automatically classify inclusiveness-related user feedback.

4.1 Data Collection

We collected data from three popular online sources of user feedback: Reddit, Google Play Store, and Twitter. We chose these three sources because prior studies have successfully found software-relevant information, such as bugs and features, in these channels [23], [24], [25]. Reddit is popular for its high character limit, which allows users to engage in elaborate discussions. A single Reddit post has room to provide extensive details about a particular issue that are otherwise not available in comparable feedback sources. Google Play Store allows app users to leave reviews about any app, which helps software organizations elicit concerns regarding a particular app. In contrast, Twitter is one of the most popular social media platforms and supports short-form textual user discourse about any topic. Twitter has been shown to provide requirements-relevant information for organizations to analyze [28].

To compile our data, we collected a list of 50 of the most popular apps from Google Play Store, each of which had to have reviews between Jan 1, 2022, and Dec 31, 2022. These apps come from various domains and are actively installed by a diverse group of users across the world. We used the same list to scrape data from Reddit and Twitter, giving us a unified set of apps. For Reddit, we used a publicly available dataset [29] and obtained over 382K Reddit posts. Next, we collected 9 million app reviews from Google Play using the Google Play Scraper library [1]. Lastly, we used the snscrape library [2] to scrape 841,788 discussions from Twitter.

Once we collected all the data, we filtered it by removing any empty posts. Additionally, we filtered out any post with fewer than three words, as we believe that such posts most likely do not provide meaningful information. We were left with over 3.7 million app reviews, 824 thousand tweets, and 359 thousand Reddit posts.
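The filtering step can be illustrated with a minimal Python sketch. The function names `keep_post` and `filter_posts` are hypothetical, and counting words by splitting on whitespace is an assumption; the text does not specify how words were tokenized.

```python
def keep_post(text, min_words=3):
    """Return True if a post is non-empty and has at least `min_words` words.

    Assumption: words are counted by splitting on whitespace.
    """
    if text is None:
        return False
    return len(text.split()) >= min_words


def filter_posts(posts, min_words=3):
    """Drop empty posts and posts with fewer than `min_words` words."""
    return [post for post in posts if keep_post(post, min_words)]


# Illustrative input only; these are not posts from the dataset.
sample_posts = ["", "   ", "Great app", "Cannot change the language", None]
print(filter_posts(sample_posts))
```

Under these assumptions, only the four-word post survives; the empty, whitespace-only, missing, and two-word posts are removed.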

4.2 Qualitative Analysis

To analyze the data, we used methods from Socio-Technical Grounded Theory (STGT) [9]. STGT is a modern Grounded Theory approach well suited for research in Software Engineering (SE); it enables a more focused study through a lean (i.e., lightweight) literature review and the use of new data collection methods, such as data mining techniques applied to online sources. STGT can be applied either in its full form, which produces novel theories, or in a more limited manner, using the basic data analysis techniques to establish important categories or initial hypotheses. Due to the exploratory nature of our study, we opted for the second option to analyze online user feedback. Our goal was to develop an understanding of inclusiveness related to user feedback from the end-user perspective.

The STGT basic analysis steps consist of open coding, constant comparison, and basic memoing. For the open coding, we first performed random sampling to obtain a large amount of data from all 50 apps and within each of the three sources. We used a random sampling technique with a 99% confidence level and a 2% margin of error, which resulted in 4,113 samples from Reddit, 4,156 from Google Play Store, and 4,140 from Twitter.
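The text does not state which sample-size formula was used. As a sketch, the following assumes Cochran's formula with a finite population correction, a z-score of 2.58 for the 99% confidence level, the most conservative proportion p = 0.5, and the rounded post-filtering counts from Section 4.1 as population sizes. The function name `sample_size` is hypothetical.

```python
import math


def sample_size(population, z=2.58, margin=0.02, p=0.5):
    """Sample size for estimating a proportion in a finite population.

    Assumptions (not stated in the text): Cochran's formula with finite
    population correction, z = 2.58 for 99% confidence, and p = 0.5.
    """
    # Sample size for an infinite population.
    n0 = (z ** 2) * p * (1 - p) / (margin ** 2)
    # Finite population correction.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


# Rounded population sizes taken from the filtered dataset in Section 4.1.
for source, population in [("Reddit", 359_000),
                           ("Google Play Store", 3_700_000),
                           ("Twitter", 824_000)]:
    print(source, sample_size(population))
```

With these assumed inputs the sketch yields 4,113, 4,156, and 4,140 samples respectively, which is consistent with the reported figures, although the exact population counts and z-score used in the study may differ.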

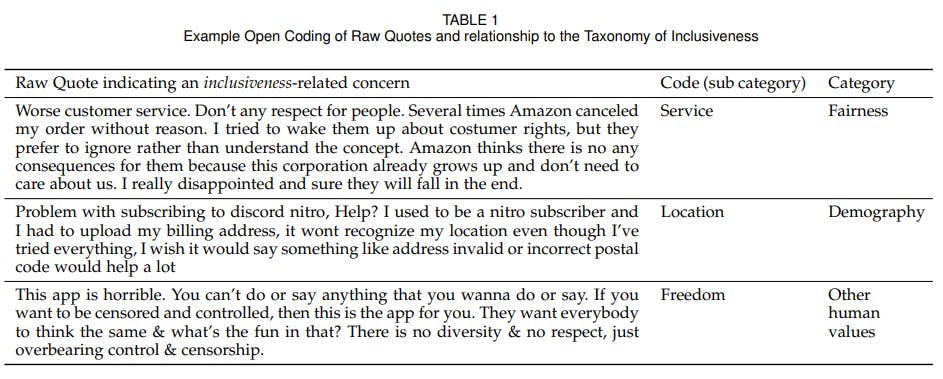

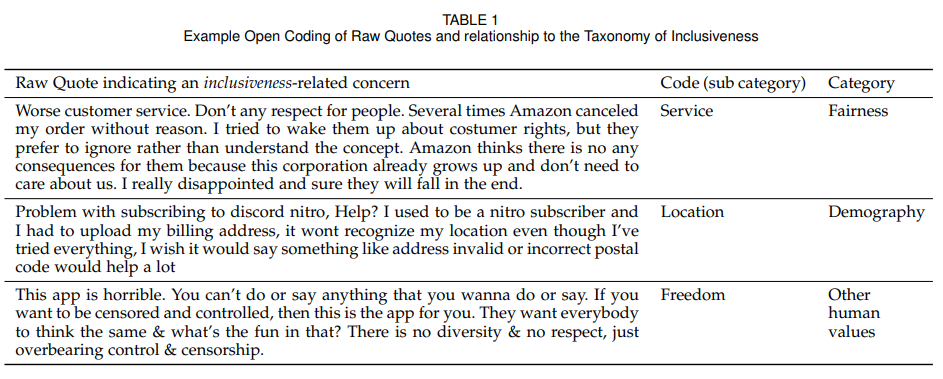

To guide our coding technique, in line with STGT, we conducted a lightweight literature review in preparation for the study to identify the existing understanding of inclusiveness in software engineering (as outlined in the Related Work section). We refer to the definition of inclusiveness from Khalajzadeh et al. [7] and define the term as “any user concern related to the inclusion, exclusion or discrimination toward a specific individual or group of users while using the software.” Based on this definition, two members of the research team analyzed the randomly selected data and assigned a binary inclusiveness or non-inclusiveness label to each post. When a post was given an inclusiveness label, we additionally assigned a code based on the characteristics of the feedback. For example, the quote “Worse customer service. Don’t any respect for people. Several times Amazon canceled my order without reason. I tried to wake them up about costumer rights, but they prefer to ignore rather than understand the concept. Amazon thinks there is no any consequences for them because this corporation already grows up and don’t need to care about us. I really disappointed and sure they will fall in the end” was first labelled as inclusiveness related. Additionally, the code “service” was assigned to it because the user expressed frustration about feeling excluded by not receiving customer support. Table 1 illustrates examples of the labelling process.

Any time a new code emerged for the inclusiveness-related user concerns, the two human annotators met and discussed the implications of the new code. We used the constant comparison method to compare the derived codes across all three user feedback sources and to uncover the underlying patterns and relations between the codes. Our analysis yielded 18 codes under inclusiveness, which we further grouped into 6 categories. We employed the basic memoing technique to document our reflections on the emerging codes and categories. A significant part of STGT, memoing encapsulates the researchers’ thoughts, enabling a systematic development of categories from the initial codes.

Another important aspect of STGT is theoretical saturation, which means “when data collection does not generate new or significantly add to existing concepts, categories or insights, the study has reached theoretical saturation” [9]. Therefore, the two human annotators continued the labelling process until no new categories emerged and code definitions became stable (i.e., saturation). During this process, our initial random sample became insufficient to reach saturation, and we further randomly sampled additional posts from all three sources and analyzed them until we reached saturation for each category of our analysis. We stopped labelling when we observed no additional insights emerging from the last 200 posts that we coded from Reddit and Google Play Store. For Twitter, due to the presence of irrelevant discussions in the data, saturation was reached after we coded 500 additional posts.
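The stopping rule can be approximated in code. The sketch below is a simplification: it treats “no additional insights” as “no previously unseen code” within a trailing window of posts, whereas the actual judgment was made by the human annotators. The function name `saturation_index` and the placeholder code `other_code` are hypothetical; `service` is the code from the example above.

```python
def saturation_index(coded_posts, window=200):
    """Return how many posts were labelled when saturation was reached.

    `coded_posts` is a sequence of sets of codes, one per post, in
    labelling order (an empty set means non-inclusiveness). Saturation
    is approximated as `window` consecutive posts with no unseen code.
    Returns None if this never happens.
    """
    seen_codes = set()
    posts_since_new_code = 0
    for index, codes in enumerate(coded_posts, start=1):
        new_codes = set(codes) - seen_codes
        if new_codes:
            seen_codes |= new_codes
            posts_since_new_code = 0
        else:
            posts_since_new_code += 1
        if posts_since_new_code >= window:
            return index
    return None


# Tiny illustrative run with a window of 3 instead of 200.
labelled = [{"service"}, {"other_code"}, set(), {"service"}, set(), set()]
print(saturation_index(labelled, window=3))
```

In this toy run the last new code appears at the second post, so the window of three posts without a new code closes at the fifth post.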

In total, we labelled 4,647 discussions from Reddit, 4,949 from Google Play Store, and 13,511 from Twitter.

We present the categories from our analysis in the form of a two-layer taxonomy of inclusiveness, with the six categories forming the primary layer and the 18 codes distributed among the categories as sub-categories. The labelled data and memos can be found in the replication package [30]. In Section 5, we describe the taxonomy of inclusiveness with detailed examples.

4.3 Automated Analysis

To analyze the effectiveness of automatically identifying inclusiveness-related user feedback, we experimented with a number of classifiers that can automatically distinguish inclusiveness from non-inclusiveness posts in the user feedback dataset. Recall that in Section 4.2 we collated a large human-annotated set of user feedback. This labelled set served as the ground truth for training a classifier.

We studied five widely recognized pre-trained large language models (LLMs) that have demonstrated effectiveness in text classification. The five models are: GPT2 (Generative Pre-trained Transformer 2) [31], BERT (Bidirectional Encoder Representations from Transformers) [32], RoBERTa (Robustly Optimized BERT Approach) [33], XLM-RoBERTa (Cross-lingual Language Model - Robustly Optimized BERT Approach) [34], and BART (Bidirectional and Auto-Regressive Transformers) [35]. Previous work on classifying requirements-relevant information from text has achieved satisfactory results using these five models [36]. Therefore, we opted for the same set of LLMs.

From the labelled dataset obtained through STGT, we prepared a balanced set of training data, as an imbalanced dataset can lead machine learning models to prioritize the major categories and neglect the minor categories [37]. We report the performance of the classifiers using four widely used evaluation metrics: precision, recall, accuracy, and F1-score.
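The balancing step and the four metrics can be sketched as follows. The text does not say how the dataset was balanced; random undersampling of the majority class is an assumption here, and the function names `undersample` and `binary_metrics` are hypothetical. Label 1 stands for inclusiveness and label 0 for non-inclusiveness.

```python
import random
from collections import defaultdict


def undersample(examples, seed=0):
    """Balance (text, label) pairs by downsampling every class to the
    size of the smallest class. Undersampling is an assumed strategy."""
    by_label = defaultdict(list)
    for text, label in examples:
        by_label[label].append((text, label))
    smallest = min(len(items) for items in by_label.values())
    rng = random.Random(seed)
    balanced = []
    for items in by_label.values():
        balanced.extend(rng.sample(items, smallest))
    rng.shuffle(balanced)
    return balanced


def binary_metrics(y_true, y_pred, positive=1):
    """Compute precision, recall, accuracy, and F1 for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / len(pairs) if pairs else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall,
            "accuracy": accuracy, "f1": f1}


# Illustrative labels only; these are not results from the study.
print(binary_metrics([1, 1, 1, 0, 0, 0], [1, 1, 0, 1, 0, 0]))
```

In the illustrative call there are two true positives, one false positive, one false negative, and two true negatives, so all four metrics equal 2/3.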

In the sections that follow, we present the empirical results of our study.

[1] https://github.com/JoMingyu/google-play-scraper

[2] https://github.com/JustAnotherArchivist/snscrape

This paper is available on arXiv under a CC 4.0 license.